John Carmack, legendary programmer and co-founder of id Software, recently shared his thoughts on NVIDIA’s latest and smallest supercomputer, the DGX Spark. And…well, it’s not great.

In a post on X, Carmack says that his DGX Spark unit draws around 100W, less than half of its touted 240W rating, and achieves about 480 TFLOPS in FP4, or roughly 60 TFLOPS in BF16 workloads. As impressive as that sounds, it’s still less than half of the expected output.

“It gets quite hot even at this level, and I saw a report of spontaneous rebooting on a long run, so was it de-rated before launch?”

Carmack wasn’t alone in his experience with the DGX Spark. Awni Hannun also chimed in on Carmack’s findings, confirming that his machine was only getting around 60 TFLOPS in BF16 when he had expected more. That number, by the way, is far lower than NVIDIA’s claim that the mini supercomputer is capable of achieving 1 PFLOPS of performance. Some experts believe that the 1 PFLOPS claim is based on structured sparsity, a hardware feature that skips zero-value operations in neural networks, effectively doubling the theoretical compute rate.
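To put those figures side by side, here is a minimal Python sketch of the arithmetic. It uses only the numbers quoted above; the variable names are illustrative, the 2x sparsity factor is the assumption attributed to "some experts", and the FP4-to-BF16 ratio is simply what the reported 480 and 60 TFLOPS figures imply, not a confirmed NVIDIA specification.

```python
# Figures quoted in the article (TFLOPS unless noted otherwise).
CLAIMED_FP4_TFLOPS = 1000.0    # NVIDIA's "1 PFLOPS" FP4 claim
MEASURED_FP4_TFLOPS = 480.0    # FP4 figure from Carmack's post
MEASURED_BF16_TFLOPS = 60.0    # BF16 figure reported by Carmack and Hannun
RATED_POWER_W = 240.0          # advertised power rating
MEASURED_POWER_W = 100.0       # approximate draw Carmack observed

# Assumption: the headline claim relies on 2x structured sparsity.
SPARSITY_SPEEDUP = 2.0

# Ratio between the FP4 and BF16 figures as reported (not an official spec).
fp4_to_bf16_ratio = MEASURED_FP4_TFLOPS / MEASURED_BF16_TFLOPS

# Share of the advertised performance and power actually observed.
perf_fraction = MEASURED_FP4_TFLOPS / CLAIMED_FP4_TFLOPS
power_fraction = MEASURED_POWER_W / RATED_POWER_W

# What the claim would look like without sparsity, and scaled down to BF16
# using the ratio implied by the reported numbers.
dense_fp4_claim = CLAIMED_FP4_TFLOPS / SPARSITY_SPEEDUP
implied_bf16_claim = CLAIMED_FP4_TFLOPS / fp4_to_bf16_ratio

print(f"FP4 vs BF16 ratio in reported figures: {fp4_to_bf16_ratio:.0f}x")
print(f"Share of claimed FP4 performance:      {perf_fraction:.0%}")
print(f"Share of rated power draw:             {power_fraction:.0%}")
print(f"Claim without 2x sparsity (FP4):       {dense_fp4_claim:.0f} TFLOPS")
print(f"Claim scaled to BF16 by that ratio:    {implied_bf16_claim:.0f} TFLOPS")
```

Under these assumptions, both the performance and the power draw land below half of the advertised figures, which is the gap Carmack is pointing at.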

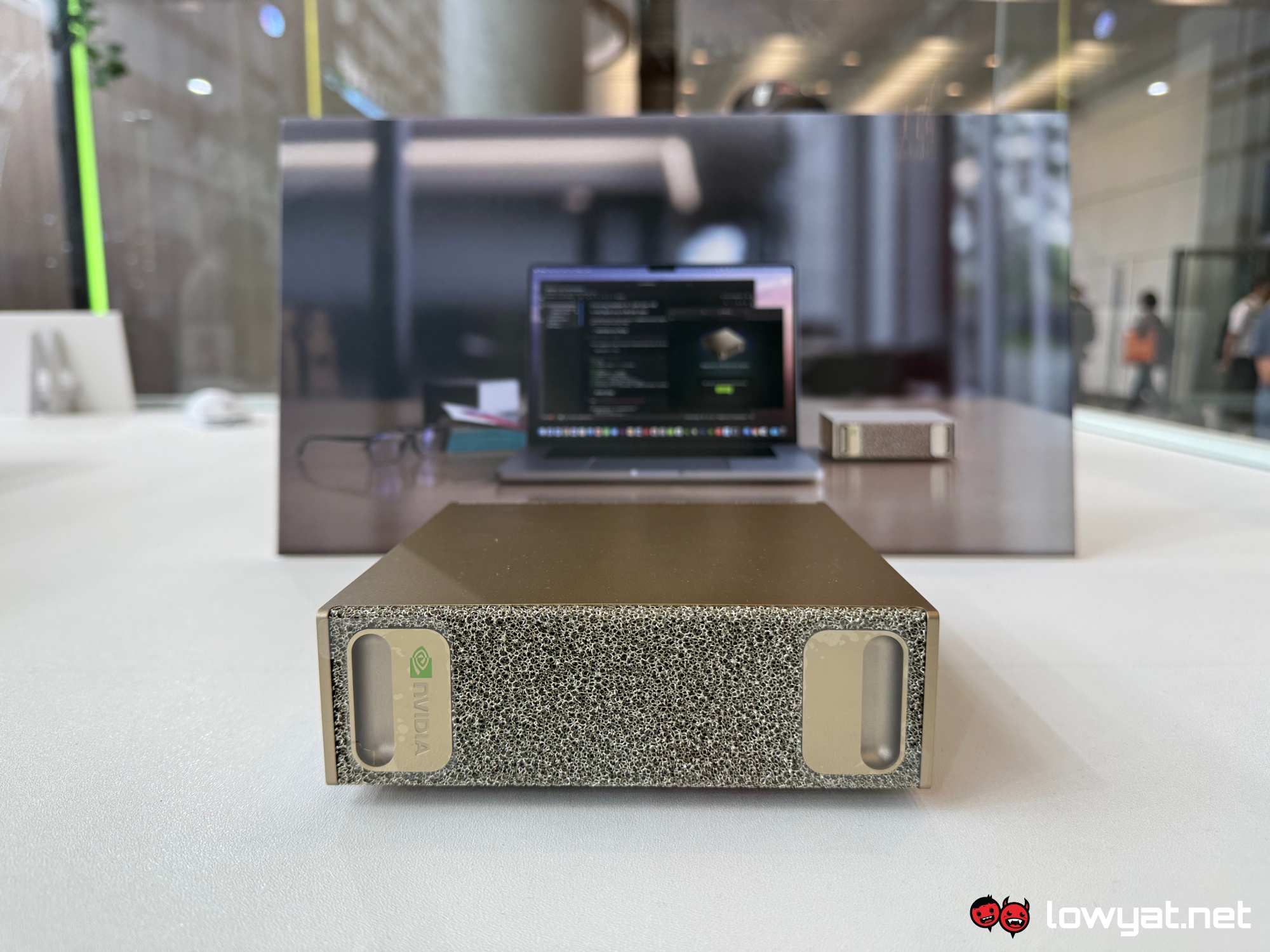

The NVIDIA DGX Spark went on sale earlier this week, and retails for US$3,999 (~RM16,924). As per the official marketing page, the Spark uses a Grace Blackwell GB10 superchip, features 128GB of unified system memory, delivers 1 petaflop of FP4 AI performance, and requires just 240W to run, versus the 3,200W power requirement of its predecessor. Additionally, it features NVIDIA’s ConnectX-7 200Gb/s networking and NVLink-C2C technology.

(Source: John Carmack via X, Videocardz)