Roblox will soon roll out a mandatory age verification system that requires all users to provide an ID or a face scan to use the platform’s chat features. The policy will first take effect in Australia, New Zealand, and the Netherlands in December, before expanding to other markets early next year.

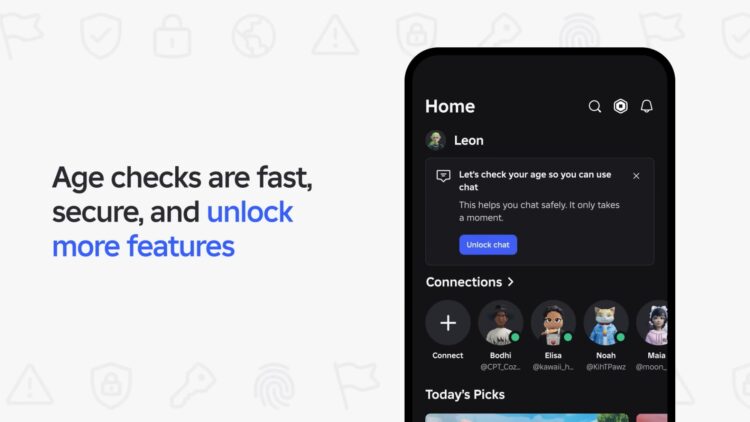

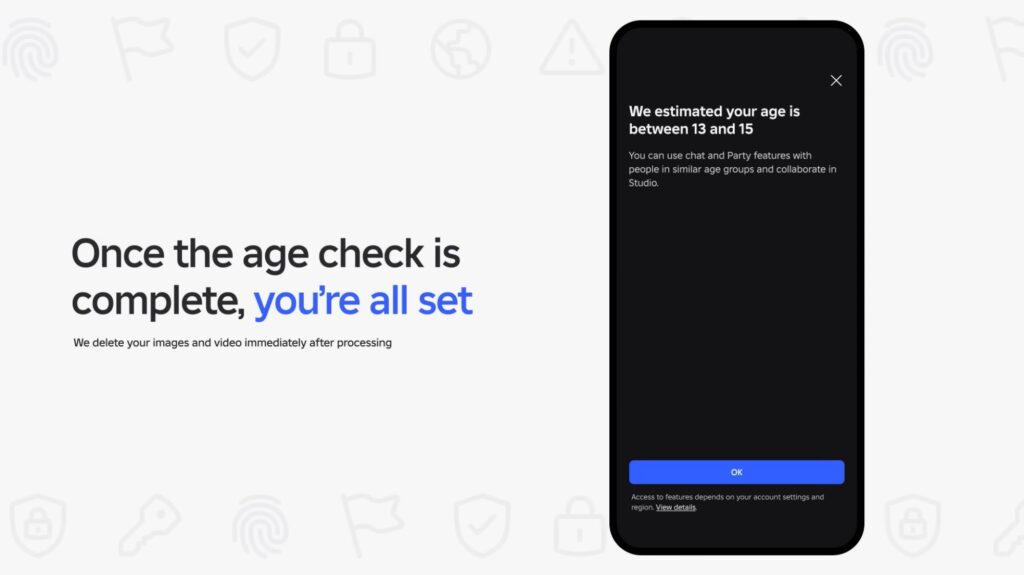

The company also explained its new “age-based chat” system, which will prevent users from interacting with others outside of their age range. Once a user has submitted their ID or a face scan, Roblox will place them in a specific age group. There are six age groups: Under 9, 9 – 12, 13 – 15, 16 – 17, 18 – 20, and 21+.
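To make the grouping concrete, here is a minimal sketch of how an age could be mapped to the six brackets listed above. It is only an illustration: the function name `age_group` and the constants are hypothetical, the labels use plain hyphens for simplicity, and nothing here reflects how Roblox or Persona actually implement the system.

```python
from bisect import bisect_right

# Hypothetical illustration only; not Roblox's actual implementation.
# Each value is the youngest age of a bracket, starting from the second one.
AGE_GROUP_LOWER_BOUNDS = [9, 13, 16, 18, 21]

# Labels follow the six groups named in the article.
AGE_GROUP_LABELS = ["Under 9", "9-12", "13-15", "16-17", "18-20", "21+"]


def age_group(age: int) -> str:
    """Return the label of the bracket that the given age falls into."""
    if age < 0:
        raise ValueError("age cannot be negative")
    # bisect_right counts how many lower bounds are <= age,
    # which is exactly the index of the matching label.
    return AGE_GROUP_LABELS[bisect_right(AGE_GROUP_LOWER_BOUNDS, age)]


if __name__ == "__main__":
    for sample_age in (8, 12, 15, 17, 20, 34):
        print(sample_age, "->", age_group(sample_age))
```

Keeping the lower bounds in a sorted list means a bracket could be added or shifted by editing one value, rather than rewriting a chain of comparisons.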

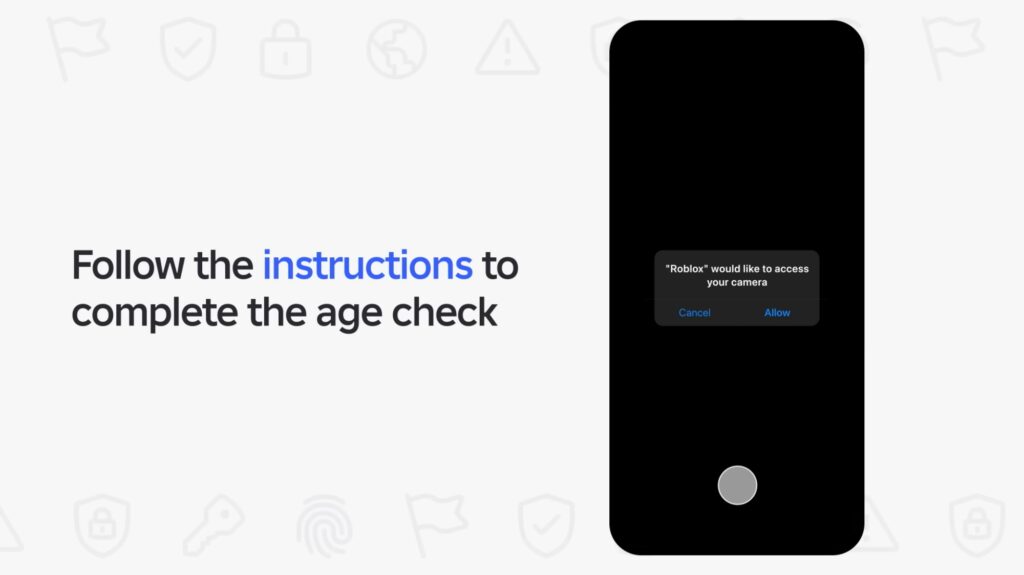

For players with a valid ID, a quick scan should suffice to access the game’s chat features. But for those without one, such as children, the system uses what the company calls Facial Age Estimation to determine their age.

This tech, provided by identity company Persona, will check the submitted face scan and estimate the user’s age. Roblox also said the face scan will be deleted immediately after it has been processed.

Unfortunately, Roblox did not explain how accurate its age estimation is, although Matt Kaufman, the company’s Chief Safety Officer, said that it was “pretty accurate”. It’s also worth mentioning that the company plans to impose age restrictions on accessing external social media links and using Roblox Studio.

If you’ve been following the popular gaming platform, you’re likely aware of the recent issues that have made it quite infamous. Both local and international government bodies have questioned Roblox about how it enforces child safety on the platform.

Naturally, the effects of the Batu Pahat incident still linger in the public consciousness, to the point that the Malaysian government has considered banning the popular game. Even if the game isn’t banned, there is still the possibility of Roblox being required to obtain a licence to operate in Malaysia.

Only time will tell if this age verification feature can fix the problem. Still, it is a step in the right direction that will hopefully limit children’s exposure to sensitive topics and information.