The AI boom of recent years is not without its costs. Besides consuming enormous amounts of energy, AI hardware generates a great deal of heat. This makes cooling a major concern, as current methods struggle to keep up with the demands of modern data centres. However, Microsoft may have achieved a breakthrough in chip cooling technology, or at least that is what the company claims.

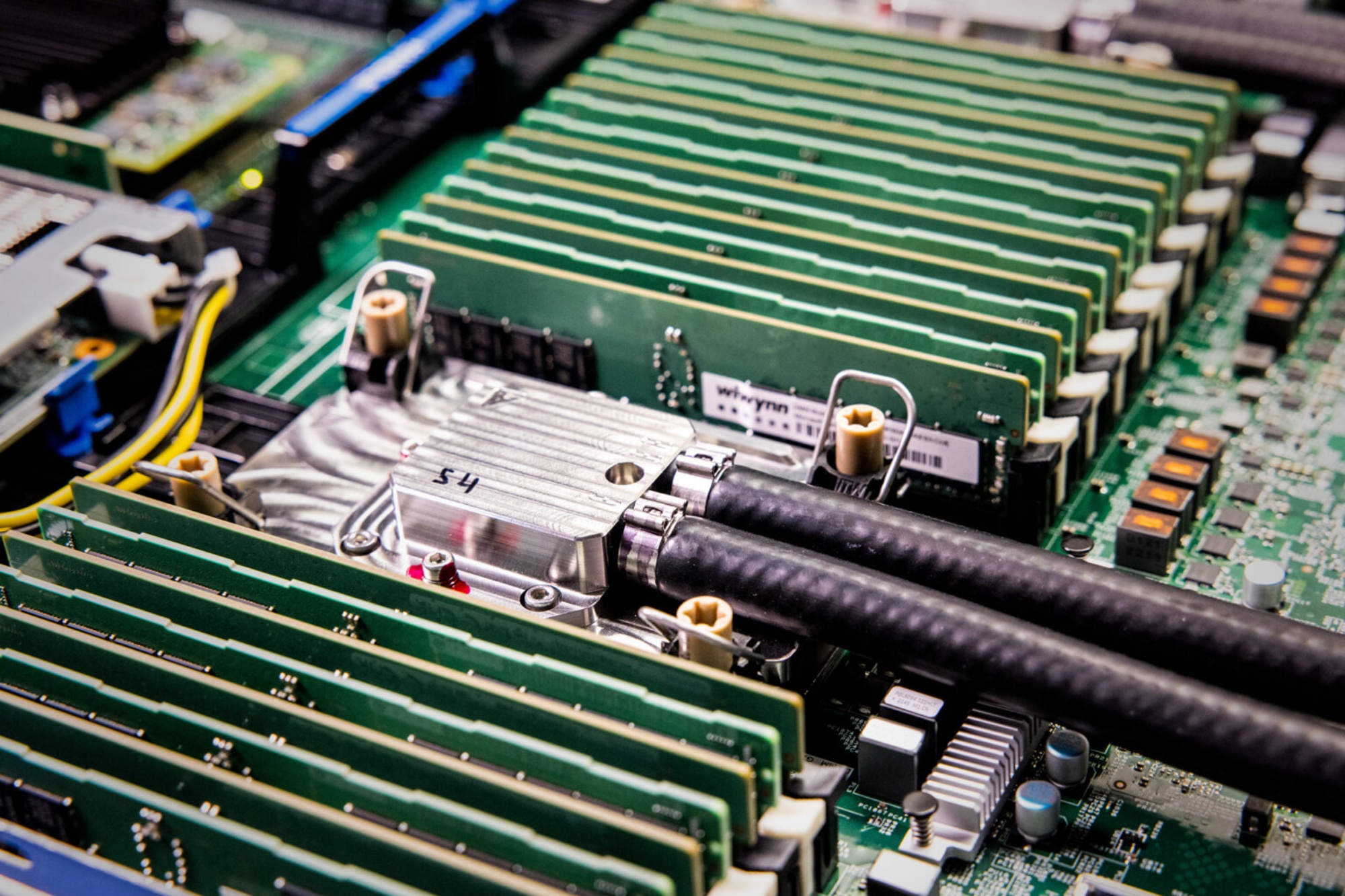

This new technology is based on microfluidics, which brings the coolant directly to the heat source: the chip itself. The company's prototype system uses tiny channels etched into the back of the silicon chip. These grooves let liquid coolant flow directly over the silicon, removing heat more efficiently.

Microsoft's research team also relied on AI to design this solution. Besides using AI to identify the hot spots on the chip, the team used it to help optimise a nature-inspired layout for the channels. These channels, each roughly the width of a human hair, branch like the veins in a leaf or a butterfly wing.

As for the effectiveness of this design, Microsoft says its lab tests showed that microfluidic cooling removed heat up to three times better than cold plates, which are currently used in most data centres, depending on the workload and configuration. In addition, the maximum temperature rise of the silicon inside a GPU was reduced by up to 65%. The company notes that this figure will vary depending on the type of chip.

Microsoft's exploration of microfluidics demonstrates the technique's potential to improve performance and efficiency. However, this is only the first step toward the tech giant's long-term goals. The company envisions entirely new chip architectures in the future, such as 3D chips.

(Source: Microsoft)