Despite repeated disclaimers from AI firms and warnings from experts, everyone from regular users to celebrities, major companies and organisations continues to put far too much faith in generative AI, more often than not with little to no moderation.

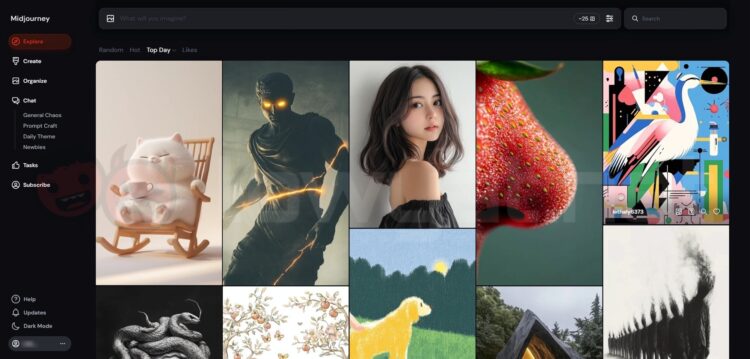

On multiple occasions, this over-reliance on tools such as ChatGPT and Midjourney has resulted in embarrassing blunders, followed by the usual cringe-worthy apologies and explanations. This is especially true in cases involving AI-generated imagery.

While we’ve more or less moved past the days of warped visuals and people with too many fingers, today’s AI art still stumbles – often producing inaccurate details, incomprehensible text, unnatural features, and more. To those with a keen eye, these oversights are neither acceptable nor usable. But for many others, they’re simply shrugged off. Unfortunately, the latter group still appears to be the majority, judging by recent incidents.

As mentioned earlier, dependence on AI “art” applies not only to regular users, but also to established individuals and companies. Standout examples include Disney and Marvel, which included generative AI art in the opening sequence of their Secret Invasion TV show. Their rival, streaming giant Netflix, was also caught utilising the technology for the title cards and promotional materials of its TV shows and movies. Elsewhere, beloved toy brand LEGO landed itself in hot water after users pointed out it was using AI image generation for its Ninjago character quiz.

Closer to home, local advertising agency Wow Media caused a stir last year for using an AI-generated illustration on a digital billboard in Hartamas, which featured an inaccurate depiction of KLCC – for a Merdeka Day ad, no less. Meanwhile, long-running humour magazine Gila-Gila was also caught using the tech for the cover illustration of its July 2024 issue, much to the disappointment of its founder.

What do all of these cases have in common? Besides the predictable, cookie-cutter PR-flavoured apologies that followed once the culprits were caught red-handed, let’s not forget: someone had to greenlight these artworks before they went public. And yet, we keep seeing these AI-related blunders – which are not exclusive to illustrations – repeated to this very day.

Why does this keep happening? To be frank, this is where speculation outweighs fact, as none of the parties involved have cleanly admitted to their faults. Still, most observers would agree on the usual culprits: cutting corners, avoiding the cost of hiring human illustrators or designers, chasing trends, rushing to meet deadlines, or just plain laziness. Whatever the case, these are clear examples of what can go wrong when blind trust is placed in generative AI.

Now, you might be wondering why I haven’t mentioned a certain recent boo-boo involving a local publisher from earlier this week. Apart from a brief reference in a Malay Mail report, there’s been no explicit mention of AI being used. Still, it’s exactly this kind of incident that inspired me to write this piece.

So, what’s the takeaway here? Yes, generative AI can be a godsend – especially for individuals without the resources, connections or know-how to produce certain work on their own. But time and again, it has also been used irresponsibly by those who do have the means to get things done properly – including the ability to decide whether something should even be released in the first place.

And if these recurring blunders aren’t already lessons to be learned, then honestly, what else will it take?